If you’ve been experimenting with local large language models, you’ve probably heard of Ollama. It’s a solid tool that lets you run AI models directly on your computer without needing cloud services. But let’s be honest – typing commands into a terminal isn’t for everyone. That’s why so many people are searching for Ollama alternatives that offer better interfaces, easier setup, or different features.

The good news is there are several excellent alternatives to Ollama available today. Whether you’re on Windows, Mac, or Linux, there’s likely a tool that fits your technical comfort level and needs perfectly. I’ve spent considerable time testing various options and want to share what I’ve discovered about the current landscape of local LLM tools.

Table of Contents

- Understanding Ollama and Why People Seek Alternatives

- Getting Started with Ollama on Different Systems

- Top Ollama Alternatives for Windows Users

- Excellent Ollama Alternatives for Mac Users

- Powerful Ollama Alternatives for Linux Environments

- Comprehensive Comparison of Ollama Alternatives

- Making the Right Choice Among Ollama Alternatives

- Answering Common Questions About Ollama Alternatives

- Final Thoughts on Choosing Ollama Alternatives

Understanding Ollama and Why People Seek Alternatives

So what exactly is Ollama? In simple terms, it’s an open-source platform that enables you to run large language models locally on your computer. The setup process involves downloading the software and then using command-line instructions to pull down and activate different AI models.

The appeal is clear – you get privacy since your data stays on your device, no subscription fees, and the ability to use AI even without internet connectivity. Ollama supports popular models like Llama, Mistral, Code Llama, and many others through its model library.

Despite these advantages, many users find themselves looking for alternatives to Ollama. The primary reason is the command-line interface. If you’re not comfortable with terminal commands, Ollama can feel intimidating and cumbersome. Even simple tasks like switching between models or adjusting settings require memorizing specific commands.

Another limitation is the lack of fine-tuning capabilities. Ollama works well with pre-trained models but doesn’t allow you to customize or train models on your own data. The tool also provides limited control over model parameters and quantization options, which can be frustrating for users who want more granular control over their AI experience.

Getting Started with Ollama on Different Systems

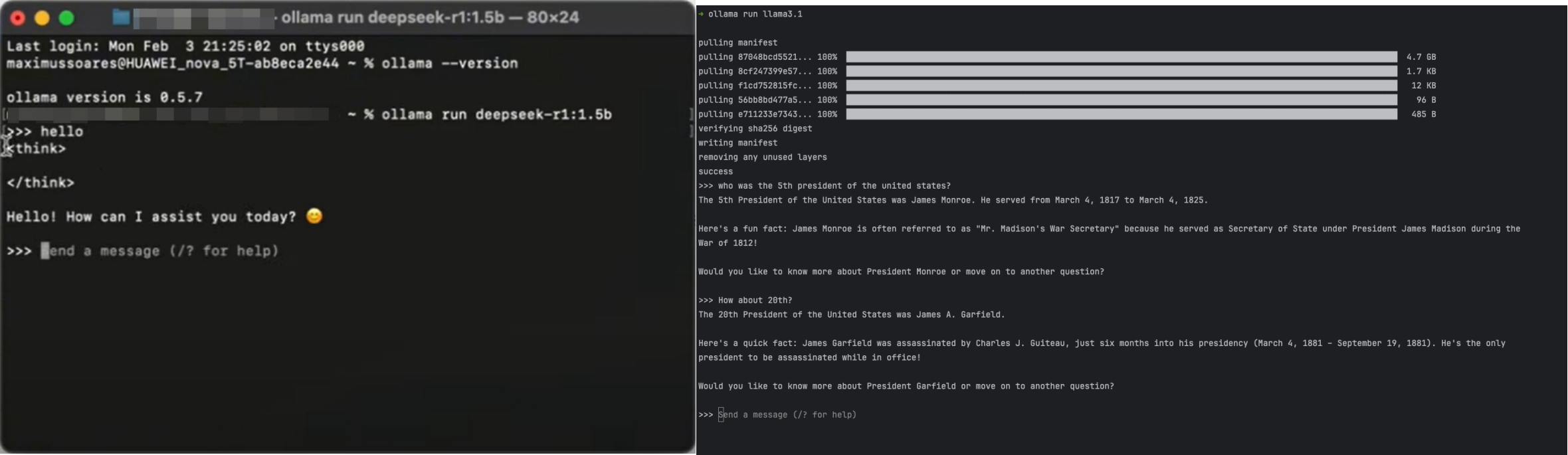

If you do decide to try Ollama, the installation process varies slightly between operating systems. On macOS, you download a .zip file from the official website, extract it, and move the application to your Applications folder. You then verify the installation through Terminal using the “ollama --version” command.

Windows users follow a similar process but download an .exe installer instead. After installation, you open Command Prompt and use the “ollama -v” command to confirm everything worked properly. From there, you can pull models from Ollama’s library using commands like “ollama run llama3.1” for the Llama 3.1 model.
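If you would rather script that check than type it by hand, here is a minimal Python sketch. It only wraps the two commands mentioned above (“ollama --version” and “ollama run”); the helper names `ollama_version` and `run_command` are my own, not part of Ollama.

```python
import shutil
import subprocess
from typing import List, Optional


def ollama_version() -> Optional[str]:
    """Return the text printed by `ollama --version`, or None if it cannot be run."""
    exe = shutil.which("ollama")  # look for the ollama binary on PATH
    if exe is None:
        return None
    try:
        result = subprocess.run(
            [exe, "--version"], capture_output=True, text=True, timeout=10
        )
    except (OSError, subprocess.TimeoutExpired):
        return None
    # Some builds may print to stderr, so fall back to it if stdout is empty.
    return (result.stdout or result.stderr).strip()


def run_command(model: str) -> List[str]:
    """Build the argument list for `ollama run <model>` (hypothetical helper)."""
    return ["ollama", "run", model]


if __name__ == "__main__":
    version = ollama_version()
    print(version if version else "Ollama does not appear to be installed")
    print("To start a model you would run:", " ".join(run_command("llama3.1")))
```

Running the script on a machine without Ollama simply reports that it is missing, which makes it a harmless first check.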

The actual usage happens entirely within the command-line interface. You type your prompts and receive responses directly in the terminal window. While functional, this interface lacks the visual polish and convenience of modern applications. There’s no chat history, no easy way to export conversations, and no visual indicators of what’s happening during processing.

Top Ollama Alternatives for Windows Users

For Windows users seeking Ollama alternatives, one option stands out for its simplicity and power: Nut Studio. It provides a complete graphical interface that eliminates the need for any command-line interaction.

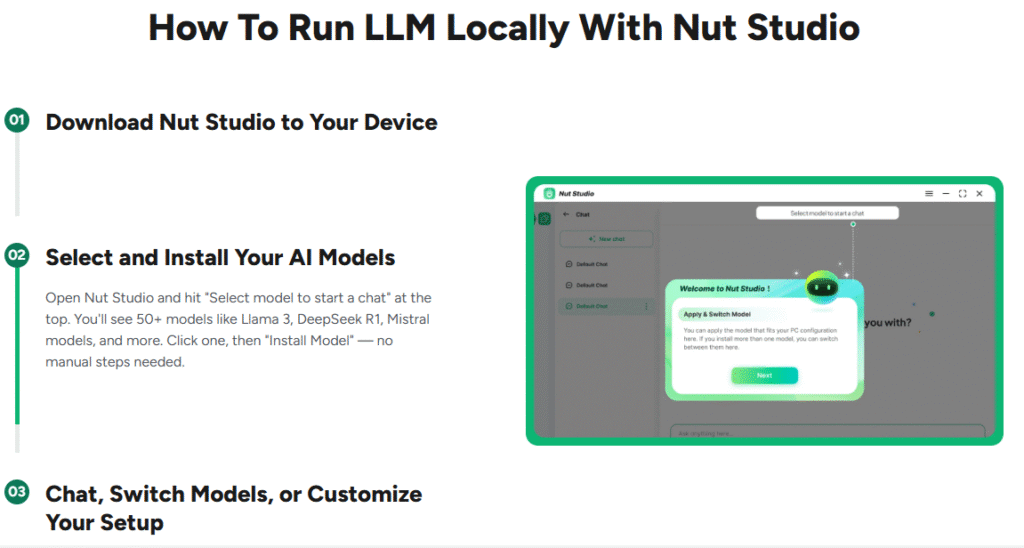

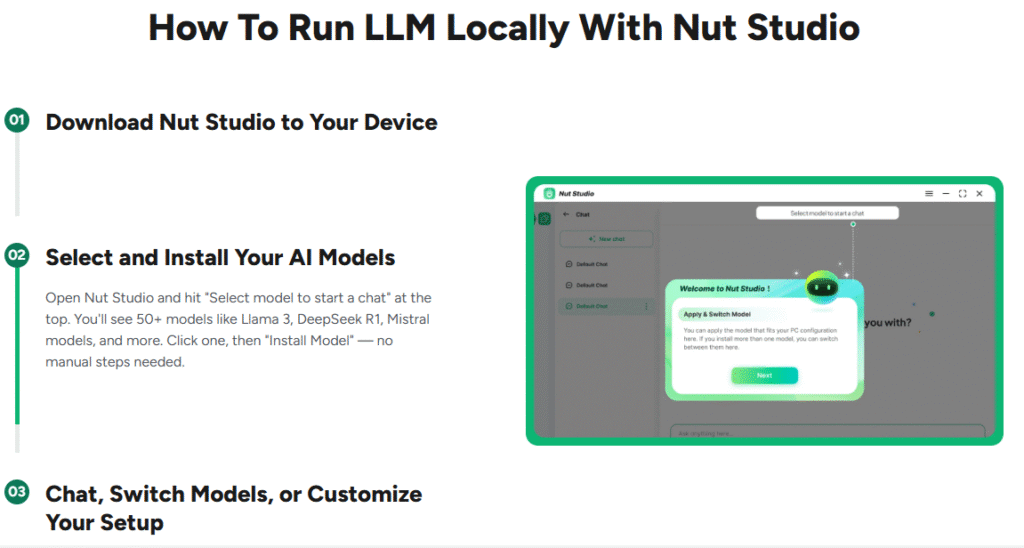

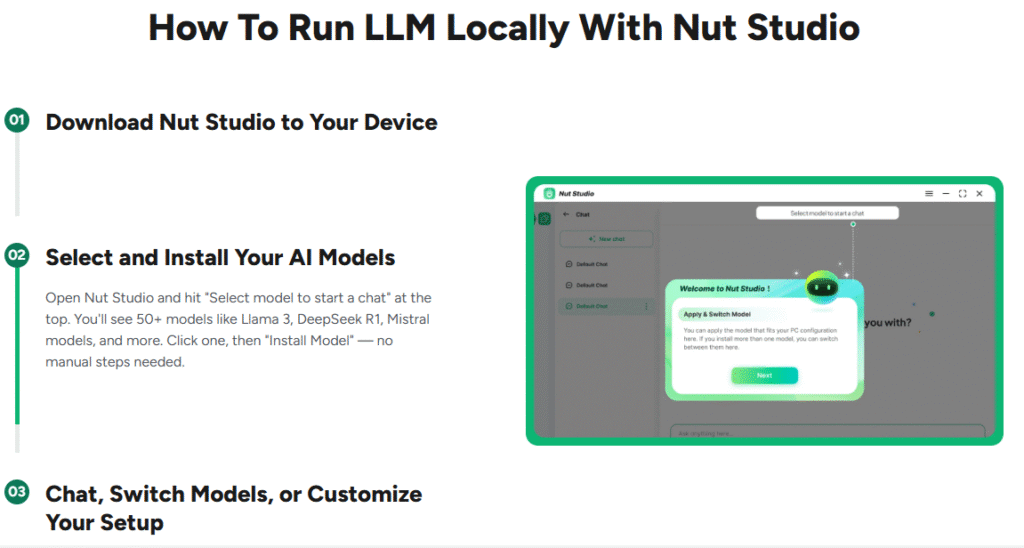

Nut Studio: Install Any Local LLM for Free with Just One Click

Nut Studio is a free LLM API that enables you to download and run 50+ AI LLM models locally with just one click, bringing AI to your desktop in seconds.

- One-click local deployment, no coding required, easy for anyone to use.

- Supports 50+ AI LLMs, including DeepSeek, Llama, Mistral, and more, all deployed locally.

- Securely customize your AI models and process various file formats locally with full privacy.

- Use your AI assistant anytime, anywhere, with no internet required and no latency.

What makes this such an appealing alternative to Ollama is its focus on accessibility. The installation process involves downloading a single executable file and running it – no complicated setup, no dependency installation, no configuration files to edit. Within minutes, you can be chatting with local AI models.

The application supports over fifty different open-source models, including all the popular ones you’d expect like various versions of Llama, DeepSeek models, Mistral, Phi, and Gemma. The model library is integrated directly into the interface, so you can browse available options, read descriptions, and download them with a single click.

One particularly useful feature is the collection of pre-configured AI agents for different tasks. Instead of starting from scratch with each conversation, you can select specialized agents for coding assistance, creative writing, academic research, or casual conversation. These agents come with optimized system prompts that help the models perform better at specific tasks.

The offline capability is another significant advantage. Unlike cloud-based AI services that require constant internet connectivity, this tool works completely offline once you’ve downloaded your preferred models. This means you can use AI assistance during flights, in areas with poor connectivity, or simply when you want to ensure complete privacy for your conversations.

File processing capabilities add another dimension to its usefulness. You can upload documents in various formats including PDFs, Word documents, text files, and presentations, then ask the AI to summarize, analyze, or answer questions about the content. This transforms the tool from a simple chat interface into a powerful research and productivity assistant.

Excellent Ollama Alternatives for Mac Users

Mac users have their own set of compelling alternatives to Ollama that leverage the macOS ecosystem beautifully. One standout option provides a native Mac application experience with a clean, intuitive interface that feels right at home on Apple devices.

This particular tool connects directly to Hugging Face, giving you access to thousands of potential models beyond what’s available in Ollama’s curated library. The interface includes a model browser where you can filter options by size, architecture, popularity, or specific use cases. This discoverability aspect is a significant improvement over Ollama’s more limited selection.

The chat interface resembles popular web-based AI services, making it immediately familiar to most users. You get a proper conversation history, the ability to have multiple separate chats, and options to export your conversations when needed. The visual presentation is polished and responsive, providing feedback during model loading and response generation.

Customization options extend beyond what Ollama offers. You can adjust temperature settings, maximum token length, and other parameters through a graphical interface rather than command-line flags. The tool also supports system prompts, stop sequences, and other advanced features that give you finer control over model behavior.
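If you are wondering what the temperature slider actually does, the usual approach in language-model sampling is to divide the model's raw scores (logits) by the temperature before turning them into probabilities. The sketch below shows that standard formula in plain Python. The logits are made-up numbers, and I am assuming the tool follows this common convention rather than quoting its internals.

```python
import math
from typing import List


def softmax_with_temperature(logits: List[float], temperature: float) -> List[float]:
    """Convert raw scores into probabilities, scaled by a sampling temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [z / temperature for z in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


if __name__ == "__main__":
    example_logits = [2.0, 1.0, 0.5]  # illustrative scores for three candidate tokens
    for t in (0.5, 1.0, 2.0):
        probs = softmax_with_temperature(example_logits, t)
        print(t, [round(p, 3) for p in probs])
```

Lower temperatures concentrate probability on the top-scoring token, so output becomes more predictable; higher temperatures flatten the distribution and make output more varied.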

For developers and researchers, the application includes an API server mode that lets you use your local models with other applications. This means you can have the benefits of local AI processing while using your favorite AI-powered tools that normally require cloud services.
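To give a feel for what talking to such a local server might look like, here is a hedged Python sketch using only the standard library. Everything specific in it is an assumption on my part: that the server speaks an OpenAI-style chat completions format, that it listens at `http://localhost:1234/v1`, and that a model called `my-local-model` is loaded. Check your tool's own server settings for the real values.

```python
import json
import urllib.request

# Assumed values for illustration only; replace with what your tool reports.
ASSUMED_BASE_URL = "http://localhost:1234/v1"
ASSUMED_MODEL = "my-local-model"


def build_chat_request(base_url: str, model: str, user_message: str,
                       temperature: float = 0.7,
                       max_tokens: int = 256) -> urllib.request.Request:
    """Build (but do not send) a POST request in the OpenAI-style chat format."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": user_message}],
        "temperature": temperature,
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send_chat_request(request: urllib.request.Request) -> str:
    """Send the request and return the reply text, assuming an OpenAI-style response."""
    with urllib.request.urlopen(request, timeout=60) as response:
        body = json.loads(response.read().decode("utf-8"))
    return body["choices"][0]["message"]["content"]


if __name__ == "__main__":
    req = build_chat_request(ASSUMED_BASE_URL, ASSUMED_MODEL, "Say hello in one sentence.")
    print(send_chat_request(req))  # only works while a compatible local server is running
```

Building the request separately from sending it makes it easy to inspect the payload first, which helps when the server rejects a parameter.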

Powerful Ollama Alternatives for Linux Environments

Linux users often have different priorities when it comes to local AI tools. While graphical interfaces are nice, performance and flexibility frequently take precedence. This is where tools like vLLM enter the picture as sophisticated alternatives to Ollama.

vLLM stands out for its exceptional performance characteristics, particularly when dealing with multiple simultaneous requests or long conversations. The secret sauce is its PagedAttention mechanism, which optimizes memory usage in a way that significantly improves throughput compared to standard inference engines.
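The core idea is easier to see in a toy model. Instead of reserving one large contiguous chunk of memory per conversation, the attention cache is split into small fixed-size blocks that are handed out only as a sequence grows, with a per-sequence table recording which blocks it owns. The Python sketch below mimics just that bookkeeping. It is my own simplified illustration, not vLLM's actual code, and the class and method names are hypothetical.

```python
from typing import Dict, List


class ToyPagedCache:
    """Toy block allocator illustrating paged cache bookkeeping (not vLLM code)."""

    def __init__(self, num_blocks: int, block_size: int) -> None:
        self.block_size = block_size                    # tokens stored per block
        self.free_blocks: List[int] = list(range(num_blocks))
        self.block_tables: Dict[str, List[int]] = {}    # sequence id -> physical block ids
        self.lengths: Dict[str, int] = {}               # sequence id -> tokens stored

    def append_token(self, seq_id: str) -> None:
        """Record one more token, allocating a new block only when the last one is full."""
        length = self.lengths.get(seq_id, 0)
        table = self.block_tables.setdefault(seq_id, [])
        if length % self.block_size == 0:  # no block yet, or the current block is full
            if not self.free_blocks:
                raise MemoryError("no free cache blocks left")
            table.append(self.free_blocks.pop())
        self.lengths[seq_id] = length + 1

    def free(self, seq_id: str) -> None:
        """Return all blocks of a finished sequence to the free pool."""
        self.free_blocks.extend(self.block_tables.pop(seq_id, []))
        self.lengths.pop(seq_id, None)

    def blocks_used(self) -> int:
        return sum(len(table) for table in self.block_tables.values())


if __name__ == "__main__":
    cache = ToyPagedCache(num_blocks=8, block_size=4)
    for _ in range(5):
        cache.append_token("chat-a")
    for _ in range(3):
        cache.append_token("chat-b")
    print("blocks in use:", cache.blocks_used())
    cache.free("chat-a")
    print("free blocks after chat-a ends:", len(cache.free_blocks))
```

Because blocks are allocated on demand, short conversations do not tie up memory sized for the longest possible one, which is what leaves room for more simultaneous requests.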

Unlike Ollama and the other alternatives we’ve discussed, vLLM isn’t really a consumer application. It’s an inference engine designed to be integrated into larger systems or used by developers building AI-powered applications. Installation typically means a Python package install (pip install vllm) or a Docker container, and it requires some technical expertise to set up properly.

The performance benefits become most apparent in specific use cases. If you’re building an application that needs to serve multiple users simultaneously, vLLM’s batch processing capabilities can handle significantly more requests per second than Ollama. For research purposes where you might be processing large datasets through language models, the speed advantage can save hours or even days of computation time.

It’s worth noting that vLLM really shines when paired with GPU acceleration. While it can run on CPU-only systems, the performance gap between CPU and GPU operation is more pronounced than with Ollama. This makes it particularly well-suited for workstations with capable graphics cards or server environments with dedicated AI accelerators.

Comprehensive Comparison of Ollama Alternatives

When evaluating the various alternatives to Ollama, it helps to compare them across several key dimensions. Each tool has particular strengths that make it better suited for certain users or use cases.

Interface design varies significantly between options. Ollama and vLLM are both operated from the command line (or, in vLLM’s case, from code), which appeals to technical users but creates barriers for beginners. The Windows-focused alternative we discussed offers a completely graphical experience with one-click operations, while the Mac option provides a polished desktop application experience.

Model support follows different patterns across these tools. Ollama uses its own curated model library with simplified download commands. The Windows alternative includes a built-in selection of popular models with easy access. The Mac tool connects directly to Hugging Face for the broadest possible model selection. vLLM supports many of the same models but requires manual setup and configuration.

Performance characteristics differ based on the underlying technology. vLLM delivers the highest throughput, especially for batch processing, thanks to its memory optimization techniques. Ollama provides respectable performance for single-user scenarios. The graphical alternatives prioritize responsiveness and user experience over raw throughput numbers.

Ease of use is perhaps the most significant differentiator. The Windows-focused tool stands out for its simplicity – download, install, click, and you’re chatting with AI. The Mac application requires slightly more setup but remains accessible to non-technical users. Ollama demands comfort with command-line interfaces, while vLLM assumes substantial technical expertise.

Special features also vary between platforms. The Windows option includes pre-built AI agents and document processing capabilities. The Mac tool offers extensive customization options and API server functionality. vLLM focuses exclusively on high-performance inference without additional features. Ollama keeps things simple with just the core functionality.

Making the Right Choice Among Ollama Alternatives

Selecting the best alternative to Ollama depends largely on your specific needs, technical comfort level, and primary use cases. Each option we’ve discussed excels in different scenarios.

For complete beginners or users who simply want the easiest possible experience, the Windows-focused graphical tool is hard to beat. The one-click operation, integrated model library, and pre-configured AI agents remove virtually all barriers to entry. If your goal is to have a helpful AI assistant without dealing with technical complexities, this represents the most straightforward path.

Mac users who value interface polish and want access to a wide variety of models will find their dedicated alternative particularly appealing. The Hugging Face integration means you’re not limited to a curated selection, and the native macOS interface provides a seamless experience. If you enjoy experimenting with different models and appreciate good design, this is an excellent choice.

Developers and technical users working on Linux systems might prefer vLLM for its performance advantages, especially if they’re building applications that require serving multiple users or processing large volumes of text. The learning curve is steeper, but the payoff in throughput can be substantial for the right use cases.

Ollama itself remains a reasonable option for users who are comfortable with command-line interfaces and want a simple, no-frills way to run local models. Its straightforward model download process and clean (if basic) interface work well for individual use.

Answering Common Questions About Ollama Alternatives

Many people have similar questions when first exploring alternatives to Ollama. Let’s address some of the most frequent concerns and curiosities.

One common question is whether these tools require powerful hardware. The answer varies by application. The graphical alternatives are designed to work well on typical consumer hardware, including laptops without dedicated graphics cards. vLLM performs best with GPU acceleration but can run on CPU-only systems. Most modern computers can handle quantized 7-billion-parameter models comfortably, while larger models need proportionally more RAM or GPU memory.
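To put rough numbers on that, you can estimate the memory needed for a model's weights as parameters times bits per weight, divided by eight. The short Python sketch below does this arithmetic. It is a back-of-the-envelope estimate under my own simplifying assumptions: it counts weights only, ignores the context cache and runtime overhead, and treats one gigabyte as one billion bytes.

```python
def weight_memory_gb(params_billions: float, bits_per_weight: float) -> float:
    """Approximate weight storage in GB (1 GB = 1e9 bytes), weights only."""
    bytes_total = params_billions * 1e9 * bits_per_weight / 8
    return bytes_total / 1e9


if __name__ == "__main__":
    for bits in (16, 8, 4):
        print(f"7B model at {bits}-bit: ~{weight_memory_gb(7, bits):.1f} GB")
```

By this estimate a 7-billion-parameter model needs about 14 GB at 16-bit precision but only about 3.5 GB at 4-bit, which is why quantized versions fit on ordinary laptops. Real files come out somewhat larger because of metadata and mixed-precision layers.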

Privacy considerations understandably concern many users. All the tools we’ve discussed operate locally on your device, meaning your conversations never leave your computer unless you explicitly export them. This provides significantly more privacy than cloud-based AI services. The Windows-focused alternative in particular emphasizes its offline capabilities and local processing.

Another frequent question involves model compatibility. Most desktop-oriented local AI tools, Ollama included, use the GGUF format, which has become something of a standard for quantized models that can run efficiently on consumer hardware. Models from Hugging Face in this format typically work across different tools, though each may have specific requirements or optimizations.
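If you have downloaded a file and are not sure it really is GGUF, a quick check is to look at its first bytes. GGUF files begin with the four ASCII characters "GGUF" followed by a 32-bit version number. The sketch below reads just that header. It assumes the common little-endian layout, and the function name `read_gguf_version` is my own.

```python
import struct
from typing import Optional

GGUF_MAGIC = b"GGUF"  # first four bytes of a GGUF file


def read_gguf_version(path: str) -> Optional[int]:
    """Return the GGUF version number, or None if the file lacks the GGUF magic."""
    with open(path, "rb") as f:
        header = f.read(8)
    if len(header) < 8 or header[:4] != GGUF_MAGIC:
        return None
    # Assumes a little-endian file; big-endian variants would decode differently.
    (version,) = struct.unpack("<I", header[4:8])
    return version


if __name__ == "__main__":
    import sys

    if len(sys.argv) == 2:
        version = read_gguf_version(sys.argv[1])
        if version is None:
            print("This does not look like a GGUF file")
        else:
            print("GGUF version", version)
```

This only confirms the container format. Whether a particular tool can actually load the model still depends on the architecture and quantization type it supports.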

People often wonder about the cost involved. All the alternatives we’ve discussed are free to use, though some may offer premium features or versions. The main “cost” is the storage space for model files, which can range from a few gigabytes for smaller models to dozens of gigabytes for larger ones.

The learning curve question comes up regularly. The graphical alternatives are designed to be immediately accessible to users of all technical levels. Ollama requires basic command-line familiarity. vLLM demands substantial technical expertise, particularly for configuration and optimization. Most users find they can become productive with the beginner-friendly options within minutes rather than hours.

Final Thoughts on Choosing Ollama Alternatives

The landscape of local AI tools has evolved significantly, offering compelling alternatives to Ollama for every type of user. Whether you prioritize ease of use, interface polish, raw performance, or specific features, there’s likely an option that matches your preferences.

For Windows users who want the simplest possible experience, tools like Nut Studio provide one-click operation that eliminates technical barriers completely. Mac enthusiasts have options that integrate beautifully with their ecosystem while offering access to a vast model library. Linux power users can leverage high-performance engines like vLLM for demanding applications.

The common thread across all these alternatives to Ollama is the ability to harness the power of large language models while keeping your data private and secure on your own device. As these tools continue to mature, we can expect even more refined experiences and capabilities.

The best approach is often to try a couple of options that seem to match your needs and technical comfort level. Many of these tools are free to download and experiment with, so you can get hands-on experience before committing to one particular solution. The right choice depends on your specific requirements, but with the current variety of excellent Ollama alternatives available, you’re almost certain to find something that works beautifully for your situation.

Some images in this article are sourced from iMyFone.

TOOL HUNTER
